OpenAI’s new o1 language model shows an improvement in step-by-step reasoning that is seemingly not present in text-davinci-002, among other gains. Although the model was trained from scratch using the familiar step-by-step reasoning approach, the improvement is most likely attributable to several other factors.

Researchers at Epoch AI recently tried to match the performance of OpenAI o1-preview on GPQA (Graduate-Level Google-Proof Q&A Benchmark), a difficult scientific multiple-choice test.

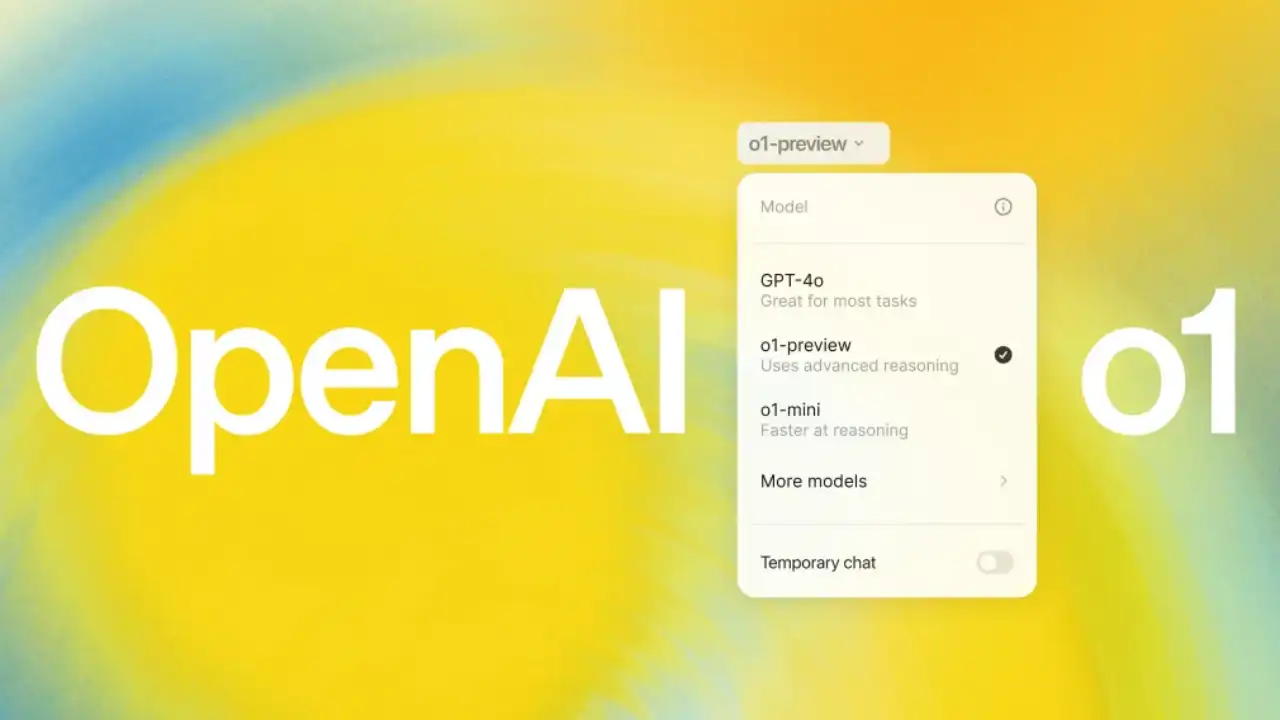

OpenAI o1 Reasoning Prompting Software

The authors found that generating more tokens gave a boost, but no number of tokens could match o1-preview’s performance. Even with a higher token count, the GPT-4o variants remained less accurate than o1-preview.
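One common way to spend more output tokens on a question is to sample several answers and keep the most frequent one (majority voting). The sketch below illustrates that idea only; it is not presented as Epoch AI's exact procedure, and `sample_answer` is a hypothetical stand-in for a model call.

```python
from collections import Counter
from typing import Callable, List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    counts = Counter(answers)
    # Counter keeps insertion order, and max() returns the first maximal key.
    return max(counts, key=counts.get)


def answer_with_voting(sample_answer: Callable[[], str], n_samples: int) -> str:
    """Draw n_samples answers from a (hypothetical) model call and vote."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    answers = [sample_answer() for _ in range(n_samples)]
    return majority_vote(answers)


if __name__ == "__main__":
    import random

    rng = random.Random(0)

    # Stand-in for a model: picks option "B" more often than the others.
    def stub_model() -> str:
        return rng.choices("ABCD", weights=[0.2, 0.4, 0.2, 0.2])[0]

    print(answer_with_voting(stub_model, n_samples=25))
```

More samples make the vote more stable, but they cannot lift accuracy above what the underlying model can reach, which is consistent with the plateau the article describes.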

The performance gap persisted even after accounting for o1-preview’s higher cost per token. Epoch AI’s extrapolation suggests that spending $1,000 on GPT-4o output tokens would still leave its accuracy 11.6% below that of o1-preview.
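To make the cost-based comparison concrete, the sketch below converts a dollar budget into output tokens and into samples per question. Every number in the usage example (price per million tokens, tokens per sample, number of questions) is a placeholder assumption, not a figure from the Epoch AI study.

```python
def output_tokens_for_budget(budget_usd: float, usd_per_million_tokens: float) -> int:
    """Number of output tokens a budget buys at a flat per-token price."""
    if usd_per_million_tokens <= 0:
        raise ValueError("price must be positive")
    return int(budget_usd / usd_per_million_tokens * 1_000_000)


def samples_per_question(
    budget_usd: float,
    usd_per_million_tokens: float,
    tokens_per_sample: int,
    n_questions: int,
) -> int:
    """Samples affordable per question when the budget is spread evenly."""
    total_tokens = output_tokens_for_budget(budget_usd, usd_per_million_tokens)
    return total_tokens // (tokens_per_sample * n_questions)


if __name__ == "__main__":
    # Placeholder assumptions: $10 per million output tokens,
    # 500 tokens per sampled answer, 200 benchmark questions.
    print(output_tokens_for_budget(1_000, 10.0))
    print(samples_per_question(1_000, 10.0, 500, 200))
```

Comparing models at a fixed budget rather than a fixed token count is what lets a cheaper model generate many more tokens per question than a more expensive one.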

From this, the researchers conclude that scaling up inference compute alone is not enough to achieve results like o1’s. They suggest that more sophisticated reinforcement learning techniques and improved search methods are likely to be a decisive factor, pointing to algorithmic research as central to AI progress.

The study’s authors also note that their results do not prove that algorithmic improvements are the sole reason for o1-preview’s advantage over GPT-4o.

Since o1 was trained on correct reasoning paths, which was not done for GPT-4o, it may also be better at following learned logical steps that lead to correct solutions faster, and so make better use of the available compute.

Separately, researchers at Arizona State University found that although o1 shows considerable improvement on planning tasks, it remains prone to errors.

According to their study, o1 performed better on logical reasoning tasks but offered no guarantee of correct solutions. Classical planning algorithms, by contrast, delivered perfectly accurate solutions with shorter computation times and at lower cost.
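The guarantee that classical planners offer can be illustrated with a toy breadth-first search: on a finite state space it either returns a shortest valid plan or reports that none exists. This is a minimal sketch, not the planners evaluated in the Arizona State University study; the two-jug puzzle and all function names are illustrative assumptions.

```python
from collections import deque
from typing import Callable, Dict, Hashable, List, Optional, Tuple

Successors = Callable[[Hashable], List[Tuple[str, Hashable]]]


def bfs_plan(
    start: Hashable,
    is_goal: Callable[[Hashable], bool],
    successors: Successors,
) -> Optional[List[str]]:
    """Return a shortest list of actions from start to a goal, or None."""
    # parent maps each visited state to (previous state, action taken).
    parent: Dict[Hashable, Optional[Tuple[Hashable, str]]] = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if is_goal(state):
            plan: List[str] = []
            while parent[state] is not None:
                state, action = parent[state]
                plan.append(action)
            plan.reverse()
            return plan
        for action, nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = (state, action)
                queue.append(nxt)
    return None  # every reachable state was explored without finding a goal


def jug_successors(cap_a: int, cap_b: int) -> Successors:
    """Toy domain: two jugs (A, B) that can be filled, emptied, or poured."""
    def successors(state):
        a, b = state
        to_b = min(a, cap_b - b)  # amount that fits when pouring A into B
        to_a = min(b, cap_a - a)  # amount that fits when pouring B into A
        moves = [
            ("fill A", (cap_a, b)),
            ("fill B", (a, cap_b)),
            ("empty A", (0, b)),
            ("empty B", (a, 0)),
            ("pour A->B", (a - to_b, b + to_b)),
            ("pour B->A", (a + to_a, b - to_a)),
        ]
        return [(act, nxt) for act, nxt in moves if nxt != state]
    return successors


if __name__ == "__main__":
    # Measure exactly 4 units in jug B using a 3-unit and a 5-unit jug.
    print(bfs_plan((0, 0), lambda s: s[1] == 4, jug_successors(3, 5)))
```

Because the search visits states in order of distance from the start, any plan it returns is valid and as short as possible, which is the kind of correctness guarantee a sampled language-model answer does not carry.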