Meta, led by CEO Mark Zuckerberg, has introduced Meta 3D Gen, a tool that generates 3D models from text descriptions in less than a minute. 3D assets are digital files that represent an object in three-dimensional space; examples range from the objects in hyper-realistic video games to the Hulk in the Avengers movies.

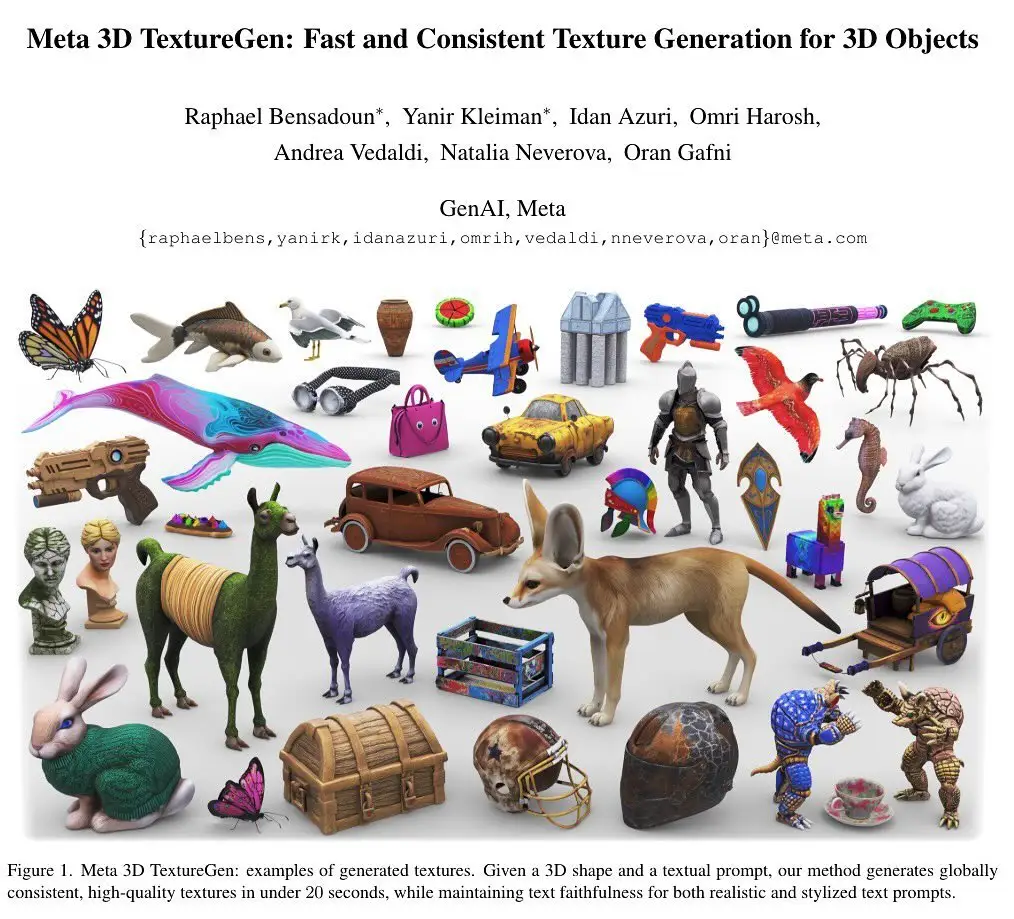

Meta 3D Gen (3D Gen) can generate 3D assets and textures from a single text input within a minute, according to Meta’s research paper.

Features of the AI 3D Generator

3D Gen is comparable to text-to-image generators such as Midjourney and Adobe Firefly, but it synthesizes fully three-dimensional models with an underlying mesh that supports physically based rendering (PBR).

This implies that the 3D models created by Meta 3D Gen can be used in real-world modeling and rendering applications.

3D Gen will be able to “support generative retexturing of previously generated (or artist-created) 3D shapes using additional textual inputs provided by the user.” This means that users will be able to give an existing 3D shape a new look by describing the texture they want in text, without regenerating the shape itself.

How to use the AI 3D generating tool

Once the user enters a text prompt, AssetGen generates an initial 3D asset with a texture and PBR material maps. This step takes roughly 30 seconds. TextureGen then generates a higher-quality texture and PBR maps for the same asset from the prompt, which takes around 20 seconds.

3D Gen integrates two of Meta's foundational generative models, AssetGen and TextureGen, and is designed to take advantage of the respective strengths of each.
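The two-stage flow described above can be sketched roughly as follows. This is only an illustration of the order of the stages and of how the reported timings add up to under a minute. The function names `asset_gen`, `texture_gen`, and `generate`, and the `Asset3D` fields, are hypothetical stand-ins, not Meta's actual API, and the placeholder strings stand in for real mesh and texture data.

```python
from dataclasses import dataclass, replace

# Approximate stage durations reported in the article, in seconds.
ASSETGEN_SECONDS = 30
TEXTUREGEN_SECONDS = 20


@dataclass(frozen=True)
class Asset3D:
    """Hypothetical container for a generated asset (placeholder fields)."""
    prompt: str
    mesh: str
    texture: str
    pbr_maps: str


def asset_gen(prompt: str) -> Asset3D:
    """Stage 1 stand-in: produce an initial mesh with a first-pass texture."""
    return Asset3D(prompt=prompt, mesh=f"mesh({prompt})",
                   texture="initial", pbr_maps="initial")


def texture_gen(asset: Asset3D, prompt: str) -> Asset3D:
    """Stage 2 stand-in: keep the mesh, replace texture and PBR maps."""
    return replace(asset, prompt=prompt, texture="refined", pbr_maps="refined")


def generate(prompt: str) -> tuple[Asset3D, int]:
    """Run both stages in order and return the asset plus estimated seconds."""
    asset = asset_gen(prompt)
    asset = texture_gen(asset, prompt)
    return asset, ASSETGEN_SECONDS + TEXTUREGEN_SECONDS


asset, total_seconds = generate("a bronze dragon statue")
print(asset.texture, total_seconds)
```

Because the second stage only swaps the texture and PBR maps while leaving the mesh untouched, the same stand-in could be called again with a different prompt to mimic the retexturing use case mentioned earlier.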

Meta claims, citing surveys among professional 3D artists, that 3D Gen is chosen over competing text-to-3D options “most of the time” and is three to 60 times faster.