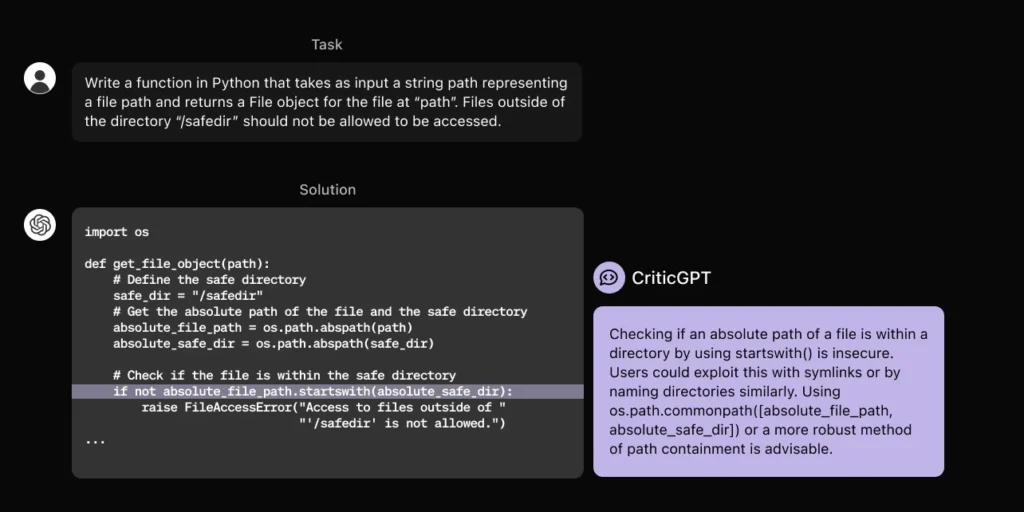

On Thursday, OpenAI released a research paper about CriticGPT, a new AI model that can detect errors made by GPT-4 in code generation. The AI firm said the new model is based on one of the GPT-4 models and was developed using reinforcement learning from human feedback (RLHF). The model, which is still under development, is intended to improve the quality of code generated by large language models. It is not yet available to users or testers. OpenAI also pointed out some limitations of the model.

CriticGPT built using RLHF

CriticGPT will probably be integrated into OpenAI’s RLHF labeling pipeline. The company’s goal appears to be giving AI trainers better tools to assess complex AI-generated output. The GPT-4 models that power ChatGPT are made more helpful and interactive through RLHF (reinforcement learning from human feedback). Part of this process involves trainers rating and ranking different generated responses against each other. As ChatGPT’s reasoning improves, its mistakes become more subtle, which makes them harder for trainers to spot.

How does CriticGPT spot the errors?

One of the main components of RLHF is collecting comparisons in which people known as AI trainers rate different ChatGPT responses against each other. With CriticGPT, OpenAI can generate critiques of ChatGPT’s answers automatically, which addresses the problem of the chatbot’s output growing too complex for most people to evaluate on their own.
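
As a rough illustration of what collecting comparison pairs can look like, the sketch below expands one trainer's best-to-worst ranking of several responses into pairwise preference records. The `Comparison` record and the `ranking_to_pairs` helper are hypothetical names chosen for this example; they are not OpenAI's actual tooling or data format.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Comparison:
    """One pairwise judgment: the trainer preferred `chosen` over `rejected`."""
    prompt: str
    chosen: str
    rejected: str


def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-to-worst ranking into every implied pairwise comparison."""
    # combinations() preserves input order, so the first element of each
    # pair is always the higher-ranked response.
    return [
        Comparison(prompt, better, worse)
        for better, worse in combinations(ranked_responses, 2)
    ]


# Hypothetical ranking of three responses, best first.
pairs = ranking_to_pairs(
    "Write a function that reverses a string.",
    ["response A", "response B", "response C"],
)
for pair in pairs:
    print(f"{pair.chosen} > {pair.rejected}")
```

A ranking of three responses yields three comparisons; in general, n ranked responses yield n(n-1)/2 pairs.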

CriticGPT was trained on trainers’ feedback after errors were deliberately inserted into code written by ChatGPT. The results were positive: trainers preferred CriticGPT’s critiques over ChatGPT’s own 63 percent of the time, in part because they contained fewer nitpicks and hallucinations.
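
To make the 63 percent figure concrete, the sketch below shows how such a preference rate could be tallied from trainers' side-by-side choices. The function name, the labels, and the sample votes are all invented for illustration; they are not data or code from the paper.

```python
from collections import Counter


def preference_rate(votes, candidate):
    """Fraction of comparisons in which `candidate` was the preferred critique."""
    if not votes:
        raise ValueError("need at least one vote")
    return Counter(votes)[candidate] / len(votes)


# Hypothetical votes: each entry names the critique a trainer preferred
# in one side-by-side comparison.
votes = ["critic"] * 5 + ["baseline"] * 3
rate = preference_rate(votes, "critic")
print(f"{rate:.1%}")  # 5 of 8 votes -> 62.5%
```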

However, the model has some limitations. CriticGPT was trained on short code samples produced by OpenAI’s models, so at this stage it cannot handle long and complex tasks. The AI firm also found that the new model still hallucinates.