OpenAI has made several developer announcements, including the availability of the new OpenAI o1 model in the API, improvements to the Realtime API, a new fine-tuning technique, and additional SDKs. The updates target model performance, flexibility, and cost for developers who build with AI.

However, there are restrictions on its use, and the full version of the OpenAI o1 reasoning model is reportedly very costly to run because of the computing resources it requires. It costs $15 for roughly every 750,000 words analyzed and $60 for roughly every 750,000 words it produces. That makes it almost four times as expensive as the more commonly used GPT-4o model.
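To make the pricing concrete, here is a minimal sketch that turns the two quoted rates into a cost estimate. The function name and the sample workload are hypothetical, and the figures are only the per-word rates quoted above, not an official price list.

```python
# Rates as quoted in the article: US dollars per roughly 750,000 words.
WORDS_PER_UNIT = 750_000
INPUT_PRICE_USD = 15.0   # per 750,000 words analyzed
OUTPUT_PRICE_USD = 60.0  # per 750,000 words produced


def estimate_o1_cost(words_in: int, words_out: int) -> float:
    """Return the estimated cost in USD for one workload."""
    cost_in = words_in / WORDS_PER_UNIT * INPUT_PRICE_USD
    cost_out = words_out / WORDS_PER_UNIT * OUTPUT_PRICE_USD
    return cost_in + cost_out


# Hypothetical workload: 75,000 words read, 7,500 words written.
print(f"${estimate_o1_cost(75_000, 7_500):.2f}")  # prints "$2.10"
```

Output is priced at four times the input rate, so workloads that produce long answers are dominated by the output term.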

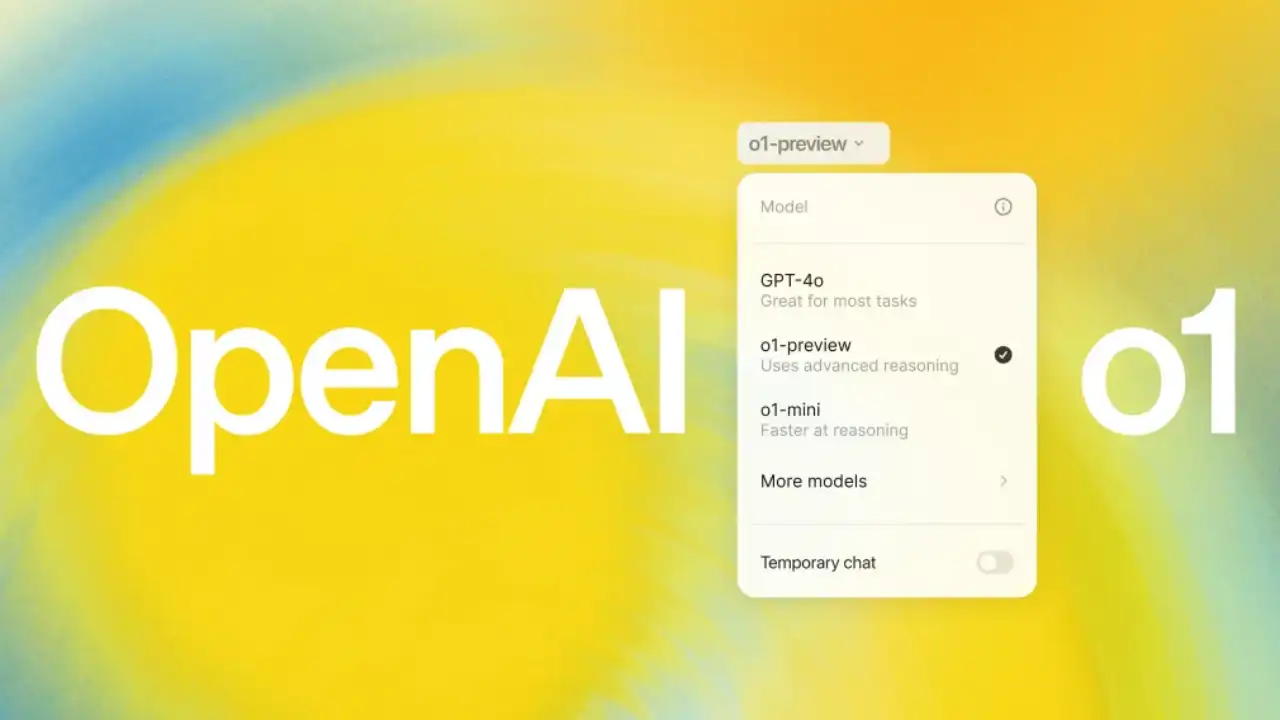

The OpenAI o1 model is a production-ready model developed specifically for multi-step reasoning tasks. It improves on the earlier o1-preview model with substantially better reliability, lower cost, and lower latency.

Major features of OpenAI’s o1 API

OpenAI o1 in the API is much more versatile than o1-preview thanks to new features: function calling, which lets the model connect to external data; developer messages, with which developers can guide the model's tone and style; and image analysis. In addition, o1 has a new API parameter, “reasoning_effort,” which controls how long the model thinks before answering a query.
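As a rough illustration of developer messages and reasoning_effort together, the sketch below builds a Chat Completions request and sends it with the official `openai` Python package. The helper names `build_o1_request` and `send` are hypothetical, the developer instruction is invented, and the accepted effort values ("low", "medium", "high") are an assumption based on the API at launch.

```python
from typing import Any


def build_o1_request(question: str, effort: str = "medium") -> dict[str, Any]:
    """Build keyword arguments for a chat completion request to o1."""
    # Assumption: the API accepts these three effort levels.
    if effort not in {"low", "medium", "high"}:
        raise ValueError(f"unsupported reasoning effort: {effort!r}")
    return {
        "model": "o1",
        "reasoning_effort": effort,
        "messages": [
            # The developer message steers tone and style.
            {"role": "developer", "content": "Answer concisely, in a formal tone."},
            {"role": "user", "content": question},
        ],
    }


def send(request: dict[str, Any]) -> str:
    """Send the request; needs the `openai` package and OPENAI_API_KEY set."""
    from openai import OpenAI  # imported lazily so the builder works offline

    client = OpenAI()
    response = client.chat.completions.create(**request)
    return response.choices[0].message.content
```

A lower effort setting should give faster, cheaper answers, while a higher one lets the model spend more time reasoning; which is appropriate depends on the task.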

- Function calling: Connects o1 to external data sources and APIs, so the model can be integrated much more closely with other systems.

- Structured Outputs: Generates responses that reliably conform to a custom JSON schema.

- Developer messages: Let developers customize the model by specifying tone, style, and other behavioral guidance.

- Vision capabilities: Reasons over images, which opens up applications in science, manufacturing (for example, technical drawings), and coding.

- Lower latency: On average, o1 uses only about 40% of the reasoning tokens that o1-preview uses for a given request.

- reasoning_effort: A new parameter that lets developers trade off response time against how long the model reasons.
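The function calling and Structured Outputs features in the list above can be sketched as a single request payload. This is an illustration under assumptions: the `get_weather` tool, the `weather_report` schema, and the sample question are all invented, and the payload shape follows the Chat Completions `tools` and `response_format` parameters as documented by OpenAI.

```python
import json

# Hypothetical tool: the name and parameters are invented for illustration.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
            "additionalProperties": False,
        },
    },
}

# Hypothetical JSON Schema that the final answer should conform to.
answer_format = {
    "type": "json_schema",
    "json_schema": {
        "name": "weather_report",
        "strict": True,
        "schema": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "summary": {"type": "string"},
            },
            "required": ["city", "summary"],
            "additionalProperties": False,
        },
    },
}

request = {
    "model": "o1",
    "messages": [{"role": "user", "content": "What is the weather in Oslo?"}],
    "tools": [get_weather_tool],
    "response_format": answer_format,
}

# Inspect the payload; it could be passed to the SDK as keyword arguments.
print(json.dumps(request, indent=2))
```

When the model decides to call the tool, the application runs the real lookup itself and returns the result in a follow-up message; the model never executes the function directly.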

Realtime API updates

The Realtime API enables AI-powered voice conversations. WebRTC support is now integrated into the Realtime API, so voice conversation applications can be built for web applications, smartphones, and IoT devices. OpenAI has also published documentation on practical ways of using WebRTC together with the Realtime API.

Preference Fine-tuning

Preference fine-tuning is a model fine-tuning technique based on the Direct Preference Optimization (DPO) method. Developers supply pairs of model responses, one preferred and one not preferred, and the model learns to favor the preferred kind. The advantage is that the model can be tuned to the preferences of the user or developer.
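For readers curious how DPO learns from a preferred/non-preferred pair, here is a minimal sketch of the standard DPO loss for a single pair, computed from sequence log-probabilities under the model being tuned (the policy) and a frozen reference model. It illustrates the published method in general; how OpenAI implements it internally is not described in the announcement, and the default `beta` value here is an arbitrary assumption.

```python
import math


def dpo_loss(
    policy_logp_preferred: float,
    policy_logp_rejected: float,
    ref_logp_preferred: float,
    ref_logp_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one preference pair, given sequence log-probabilities."""
    # How much more (or less) likely each response became versus the reference.
    preferred_gain = policy_logp_preferred - ref_logp_preferred
    rejected_gain = policy_logp_rejected - ref_logp_rejected
    margin = beta * (preferred_gain - rejected_gain)
    # -log(sigmoid(margin)) == softplus(-margin), computed in a stable form.
    x = -margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


# Policy identical to the reference: no preference learned yet.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
# Policy now favors the preferred response: the loss is lower.
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))
```

The loss falls as the policy raises the probability of the preferred response relative to the rejected one, which is the "learn between preferred and not preferred" behavior described above.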

Direct preference optimization

For developers who want to tune their AI models even further, there is a new feature called “direct preference optimization.” With the existing supervised fine-tuning method, developers have to provide sample inputs together with the exact outputs they want. With preference fine-tuning, they instead provide two responses to the same input and indicate which one is preferred.
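The difference between the two kinds of training data can be sketched as follows. The helper names are hypothetical, and the record layouts (a `messages` list for supervised examples; `input`, `preferred_output`, and `non_preferred_output` for preference examples) are assumptions based on OpenAI's fine-tuning guide, so check the current documentation before relying on them.

```python
import json


def supervised_record(prompt: str, answer: str) -> dict:
    """One supervised example: an input paired with its exact desired output."""
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},
        ]
    }


def preference_record(prompt: str, preferred: str, rejected: str) -> dict:
    """One preference example: two candidate outputs, one marked as preferred."""
    return {
        "input": {"messages": [{"role": "user", "content": prompt}]},
        "preferred_output": [{"role": "assistant", "content": preferred}],
        "non_preferred_output": [{"role": "assistant", "content": rejected}],
    }


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(record) for record in records)


# Invented sample data for illustration only.
pairs = [
    preference_record(
        "Summarize our refund policy.",
        "Refunds are available within 30 days of purchase.",
        "We have a policy about refunds.",
    )
]
print(to_jsonl(pairs))
```

Preference data is often easier to collect than exact target outputs, because a reviewer only has to pick the better of two responses.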

The Realtime API remains in beta but has gained a few new features, such as concurrent out-of-band responses, which let background tasks such as content filtering run without disrupting the interaction. The API now also supports WebRTC, the open standard for building real-time voice applications for browser-based clients, smartphones, and internet-connected devices.
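An out-of-band response is requested by sending a client event over the Realtime connection. The sketch below only builds that event as JSON; the helper name is hypothetical, and the field names (`conversation: "none"`, `metadata`, `modalities`) are assumptions based on the beta Realtime API documentation and may change while the API is in beta.

```python
import json


def out_of_band_event(instructions: str, topic: str) -> str:
    """Build a client event asking for a response outside the main conversation."""
    event = {
        "type": "response.create",
        "response": {
            # Assumption: "none" keeps the output out of the conversation state.
            "conversation": "none",
            # Metadata lets the client recognize this response when it arrives.
            "metadata": {"topic": topic},
            "modalities": ["text"],
            "instructions": instructions,
        },
    }
    return json.dumps(event)


# Hypothetical moderation check that runs alongside the live voice session.
print(out_of_band_event(
    "Classify the last user message as 'safe' or 'unsafe'.",
    topic="moderation",
))
```

The serialized event would be sent over the session's existing connection, and the application would match the returned response by its metadata rather than playing it to the user.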